Abstract

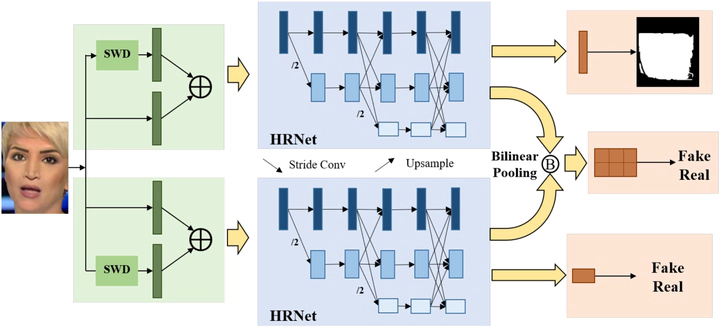

Current face forgery techniques can generate high-fidelity fake faces at extremely low labor and time costs. As a result, face forgery detection has become an important research topic for preventing abuse of the technology. In this paper, we present an inconsistency-aware wavelet dual-branch network for face forgery detection. The model is mainly based on two kinds of forgery clues: inter-image and intra-image inconsistencies. To fully utilize them, we first enhance the forgery features with additional inputs based on stationary wavelet decomposition (SWD). Then, considering the different properties of the two inconsistencies, we design a dual-branch network that predicts image-level and pixel-level forgery labels, respectively. The segmentation branch aims to recognize real and fake local regions, which is crucial for discovering intra-image inconsistency. The classification branch learns to discriminate real and fake images globally and can thus extract inter-image inconsistency. Finally, bilinear pooling is employed to fuse the features from the two branches. We find that bilinear pooling acts as a kind of spatial attentive pooling that effectively utilizes the rich spatial features learned by the segmentation branch. Experimental results show that the proposed method surpasses state-of-the-art face forgery detection methods.
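The observation that bilinear pooling behaves like spatial attentive pooling can be illustrated with a small numerical sketch. The code below is not the paper's implementation: it assumes plain bilinear pooling averaged over spatial positions, and the function names (`bilinear_pool`, `attentive_pool`), tensor shapes, and random inputs are hypothetical choices made only for illustration. Under these assumptions, each row of the fused output equals a spatially weighted average of the classification features, with one segmentation channel serving as the weight map.

```python
import numpy as np


def bilinear_pool(cls_feat, seg_feat):
    """Fuse two feature maps by (assumed) average bilinear pooling.

    cls_feat: (C1, H, W) features standing in for the classification branch.
    seg_feat: (C2, H, W) features standing in for the segmentation branch.
    Returns a (C2, C1) matrix averaged over all spatial positions.
    """
    c1, h, w = cls_feat.shape
    c2 = seg_feat.shape[0]
    x = cls_feat.reshape(c1, h * w)  # (C1, HW)
    a = seg_feat.reshape(c2, h * w)  # (C2, HW)
    return a @ x.T / (h * w)         # (C2, C1)


def attentive_pool(cls_feat, attn_map):
    """Spatially weighted average of cls_feat using one (H, W) weight map."""
    return (cls_feat * attn_map[None]).mean(axis=(1, 2))  # (C1,)


# Hypothetical toy inputs: 4 classification channels, 3 segmentation channels.
rng = np.random.default_rng(0)
cls_feat = rng.normal(size=(4, 8, 8))
seg_feat = rng.random(size=(3, 8, 8))

fused = bilinear_pool(cls_feat, seg_feat)

# Row k of the bilinear output matches attentive pooling with segmentation
# channel k used as the spatial weight map.
rows = np.stack([attentive_pool(cls_feat, seg_feat[k]) for k in range(3)])
assert np.allclose(fused, rows)
```

In this reading, a segmentation channel that responds strongly on forged regions would weight the classification features toward those regions before pooling, which is one way to interpret the attentive behavior described above.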